[tl;dr sec] #309 - Winning the AI Cyber Race, SAST at LinkedIn, Detection Engineering

Why AI offense is beating defense and Verifiability is All You Need, how LinkedIn scales SAST to millions of LOC and 10k's of repos, atomic detection rules

Hey there,

I hope you’ve been doing well!

🏡 Family Time

This week I’ve been visiting my family in the Midwest, and despite the daily high temperature here being the low in San Francisco, so far I’ve managed to survive.

I recently realized I’ve developed a reputation in my family for bringing sketchy looking things on airplanes.

This time I brought two Ziploc bags of different protein powders so I could do a taste test with my brother. Fortunately, the TSA was not bothered by two unlabeled plastic bags of powder in my carry-on 😂

One thing in particular I’m planning to do while at home is interviewing my family on stories from their lives.

I’ve realized it’s so easy to lose details about my parents’ lives before I was born, or childhood stories my siblings have that are way different than what I remember.

I think I’ll collect the photos and stories (text, videos) in some combination of a Google Doc and Google Drive / iCloud folder that I’ll share with the whole family.

If you haven’t done this before, you should give it a try. Sharing stories is a nice way to bring people together and learn about how they view the world.

📚️ Also: tl;dr sec will be off for the next two weeks, resuming January 8th, 2026.

I hope you get some time to relax and spend time with loved ones over the holidays! 🎄

P.S. Apparently the hosting provider Daniel Miessler used for his slides for our webinar (on building your personal AI infrastructure) had low bandwidth and it caused a number of people to get an error when they tried to download the slides. Sorry! You can access his slides here, and again the video here.

Sponsor

📣 What if your vulnerability scanner only gave you true positives?

We gave James Berthoty, a leading analyst and ex-security engineer, access to Maze to test in his own AWS environment with no script and said, “hit record, we’ll share what you see.”

The result? Most CVEs look scary on paper, but even if the scanner picked them up, they couldn’t actually be exploited in the specific environment. Maze uses AI agents to investigate each vulnerability in context: runtime signals, network exposure, and real attack paths, just like your best security engineer would. If Maze says it’s exploitable, it’s a real problem backed by evidence.

This was cool, I like this format a lot: James pulled up his own AWS environment and reviewed a few findings in Maze’s UI, with no guidance from them. I like how the AI agents provide a bunch of context, and concrete reproduction details about what they did and what it means. Done well, this definitely saves a lot of time.

AppSec

The Fragile Lock: Novel Bypasses For SAML Authentication

Portswigger’s Zakhar Fedotkin shows “how to achieve a full authentication bypass in the Ruby and PHP SAML ecosystem by exploiting several parser-level inconsistencies: including attribute pollution, namespace confusion, and a new class of Void Canonicalization attacks. These techniques allow an attacker to completely bypass XML Signature validation while still presenting a perfectly valid SAML document to the application.” You can download the Burp Suite extension that automates the entire exploitation process from GitHub.

Fil-C

Fil-C is a memory-safe implementation of C and C++ that catches all memory safety errors as panics, using concurrent garbage collection and "InvisiCaps" (invisible capabilities) to check every potentially unsafe operation. The implementation maintains "fanatical compatibility" with existing C/C++ codebases, supporting advanced features like threads, atomics, exceptions, signal handling, longjmp/setjmp, and shared memory, with many open source programs including CPython, OpenSSH, GNU Emacs, and Wayland working without modification. It's possible to run a totally memory safe Linux userland.

The post Linux Sandboxes And Fil-C describes how to combine Fil-C's memory safety with Linux sandboxing techniques, specifically focusing on adapting OpenSSH's seccomp-BPF sandbox to work with Fil-C. See also the Chromium and Mozilla docs on how to do sandboxing on Linux using seccomp.

Modernizing LinkedIn’s Static Application Security Testing Capabilities

Great post in which Emmanuel Law, Bakul Gupta, Francis Alexander, and Keshav Malik share how LinkedIn has scaled their SAST efforts across millions of lines of code and tens of thousands of repositories. They use CodeQL and Semgrep via GitHub Actions, require SARIF to be uploaded with no vulnerabilities above a security threshold before PRs can be merged, and manage scanning config via a minimal “stub workflow” that calls a centralized SAST workflow (so the main SAST workflow can be more easily iterated on, vs pushing an updated workflow to every repo).

They also built a Drift Management System that runs daily and ensures every repo has the stub workflow and that it matches the latest version, automatically updating it if not. The post also discusses how they handle observability, customizing which rules run where, custom rules and remediation advice, and more.
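To make the drift check concrete, here is a minimal sketch of the idea, not LinkedIn's implementation. The stub contents, the `example-org/central-sast` path, and the function names are all hypothetical, and repos are modeled as an in-memory dict rather than fetched from GitHub.

```python
import hashlib
from typing import Dict, List, Optional

# Hypothetical canonical stub; the real workflow file is not shown in the post.
CANONICAL_STUB = """\
name: sast-stub
on: [pull_request]
jobs:
  sast:
    uses: example-org/central-sast/.github/workflows/sast.yml@main
"""


def digest(text: str) -> str:
    """Hash workflow text so two versions can be compared cheaply."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def find_drift(repos: Dict[str, Optional[str]],
               canonical: str = CANONICAL_STUB) -> Dict[str, str]:
    """Return {repo: reason} for each repo whose stub is missing or outdated.

    `repos` maps a repo name to its current stub text, or None if absent.
    """
    want = digest(canonical)
    drift = {}
    for name, stub in sorted(repos.items()):
        if stub is None:
            drift[name] = "missing"
        elif digest(stub) != want:
            drift[name] = "outdated"
    return drift


def remediate(repos: Dict[str, Optional[str]],
              canonical: str = CANONICAL_STUB) -> List[str]:
    """Overwrite drifted stubs in place and return the repos that were touched."""
    drifted = find_drift(repos, canonical)
    for name in drifted:
        repos[name] = canonical
    return sorted(drifted)
```

A daily job would call `remediate` over the full repo inventory. Because the stub stays tiny, the comparison is cheap and the real scanning logic can change centrally without touching any repo.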

💡 Great example of security engineering and thoughtfully designing a SAST program that works at scale. Solid read 👍️

Sponsor

📣 Supercharge Your SOC with AI, starting now

Grab Filip Stojkovski’s new eBook, An Implementation Guide for AI-Driven Security Operations. SOCs are ditching clunky, manual playbooks for agentic AI, and this field guide shows you exactly how to modernize without chaos.

Take a look to learn to:

Gauge SOC maturity and set an AI roadmap

Build the data foundation AI needs

Select platforms that actually deliver

Prove wins with KPIs and ROI

From greenfield to MDR to mature SOCs, use step-by-step playbooks to go from alert fatigue to AI speed.

I’ve been enjoying Filip’s writing, he really has his finger on the pulse of the AI + SOC/detection space. And Exaforce has been sharing some 👌 technical blog posts recently.

Cloud Security

Abusing AWS Systems Manager as a Covert C2 Channel

Atul Kishor Jaiswal describes how to abuse AWS Systems Manager's hybrid activation feature to establish a covert command and control (C2) channel on macOS and Windows systems using legitimate, Amazon-signed binaries. Basically you install the official SSM agent on the victim machine, which then communicates with AWS endpoints over HTTPS, providing attackers with file system access, process monitoring, shell command execution, and terminal sessions without triggering security alerts as the agents communicate exclusively with trusted AWS endpoints and require no open ports or custom malware. The post concludes with Mac and Windows-specific detection strategies.

💡 I expect to see more “Living off the Cloud” techniques like this.

Exploiting AWS IAM Eventual Consistency for Persistence

OFFENSAI's Eduard Agavriloae describes how AWS IAM's eventual consistency creates a ~4-second window where deleted access keys and other IAM changes remain valid, allowing attackers to maintain persistence even after credentials are supposedly revoked. An attacker can detect when their keys are deleted and quickly create new credentials or assume roles before the deletion fully propagates. Traditional remediation approaches like applying deny policies fail during this window, as attackers can detect and remove these restrictions before they take effect.

The most effective mitigation is applying Service Control Policies (SCPs) at the account level, which attackers can't modify, followed by waiting for the consistency window to close before proceeding with traditional remediation steps.
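As a rough illustration of that mitigation, the sketch below builds a deny-all SCP document scoped to one principal. This is my assumption of what such a policy could look like, not the policy from the post. The function name, the `Sid`, and the example ARN are hypothetical, and attaching the policy through AWS Organizations is left out.

```python
import json


def quarantine_scp(principal_arn: str) -> dict:
    """Build an SCP document that denies every action for one principal.

    The shape follows the standard IAM policy grammar; the exact policy
    recommended in the original post may differ.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "QuarantineCompromisedPrincipal",  # hypothetical name
                "Effect": "Deny",
                "Action": "*",
                "Resource": "*",
                "Condition": {
                    "ArnEquals": {"aws:PrincipalArn": principal_arn}
                },
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder account ID and user name, for illustration only.
    policy = quarantine_scp("arn:aws:iam::123456789012:user/example")
    print(json.dumps(policy, indent=2))
```

The point of using an SCP is that it lives in the organization, outside the compromised account, so the attacker's credentials cannot edit or detach it. Note that SCPs do not apply to the organization's management account.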

💡 Dude, this is pretty crazy 🤯 I’m sure this is going to happen in the wild now, if it wasn’t already.

Shifting left at enterprise scale: how we manage Cloudflare with Infrastructure as Code

Chase Catelli, Ryan Pesek, and Derek Pitts describe how the Cloudflare security team implemented a "shift left" approach to manage hundreds of internal production Cloudflare accounts using Infrastructure as Code (IaC) with Terraform, Atlantis, and a custom tool called tfstate-butler, which acts as a broker to securely store state files.

They enforce security baselines through Policy as Code using Open Policy Agent (OPA) and Rego, which automatically validates configurations during merge requests to catch issues before deployment. There are currently ~50 custom policies, for example: only @cloudflare.com emails are allowed to be used in an access policy. The team also developed a drift detection service to identify unauthorized dashboard changes and implemented cf-terraforming to simplify the onboarding of existing resources.
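The email-domain policy is easy to picture as code. Cloudflare writes these checks in Rego. The sketch below is a Python stand-in for the same logic, and the input shape (`include` rules with an `emails` list) is a simplified assumption rather than the real Terraform plan format.

```python
from typing import List

ALLOWED_DOMAIN = "@cloudflare.com"


def violations(access_policy: dict) -> List[str]:
    """Return every email in the policy's include rules outside the allowed domain."""
    bad = []
    for rule in access_policy.get("include", []):
        for email in rule.get("emails", []):
            if not email.lower().endswith(ALLOWED_DOMAIN):
                bad.append(email)
    return bad


def check(access_policy: dict) -> bool:
    """True when the policy passes, mirroring a merge-request gate."""
    return not violations(access_policy)
```

In a merge-request pipeline, a non-empty `violations` list would fail the check and block the change before it is applied.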

Blue Team

mandiant/gostringungarbler

By Mandiant: A Python command-line project to resolve all strings in Go binaries obfuscated by garble. The tool works by identifying decryption routines through regex patterns, emulating them with the unicorn emulator, and patching the binary with deobfuscated strings.

Field Manual #4: What are Atomic Detection Rules?

Friend of the newsletter Zack Allen continues his detection engineering series, this time discussing “atomic detection rules,” which are narrowly defined rules that detect activity at a point in time with little to no context. Zack uses David Bianco's Pyramid of Pain framework to illustrate how detection effectiveness increases as you move from simple indicators (IPs, domains) to more complex TTPs.

The post describes how atomic detections lacking environmental context generate false positives, using examples of AWS admin logins and C2 IP address alerting to show how single-value matching creates brittle rules that attackers can easily evade. As detection engineers add more context to rules, defender operational costs increase but false positive rates decrease, an ever present cost-benefit tradeoff when writing detection rules.
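Here is a small sketch of that tradeoff, using an admin login example in the spirit of the post. The event fields, IP sets, and rule names are hypothetical, and the addresses come from documentation-reserved ranges.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    user: str
    action: str
    src_ip: str
    mfa: bool


# Placeholder egress IPs the defender expects admin logins to come from.
CORP_EGRESS = {"198.51.100.7"}


def atomic_rule(e: Event) -> bool:
    """Atomic: fire on any console login by an admin, with no other context."""
    return e.action == "ConsoleLogin" and e.user.startswith("admin")


def contextual_rule(e: Event) -> bool:
    """Same trigger, but only when MFA is missing or the source IP is unexpected."""
    return atomic_rule(e) and (not e.mfa or e.src_ip not in CORP_EGRESS)


routine = Event("admin-alice", "ConsoleLogin", "198.51.100.7", mfa=True)
suspicious = Event("admin-alice", "ConsoleLogin", "203.0.113.10", mfa=False)
```

The atomic rule fires on both events, so every routine admin login becomes an alert. The contextual rule fires only on the second, at the cost of maintaining `CORP_EGRESS` and trusting the MFA field.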

Redefining Detection Engineering: Part I

Tallis Jordan defines detection engineering as “the application of software engineering concepts to transform raw data into high-fidelity, actionable alerts that are measurable, manageable, and reliable through repeatable techniques, such as version control, testing, and optimization.” In other words, it's more than just writing SIEM rules. Tallis outlines core principles including the Generalizability Principle (behaviors over signatures), Assume Breach Mindset, Data-Driven Decision Making, Fail Fast/Learn Faster, and Signal vs. Noise Obsession. The post includes industry statistics from CardinalOps: most organizations cover only 21% of MITRE ATT&CK techniques, 13% of rules are broken, 60% of attacks go undetected, and 80% of detections are untested or misconfigured.

In Part 2, Tallis describes the day-to-day: the responsibilities, deliverables, and expectations of a detection engineer. Core responsibilities include: detection research and development, validation through test cases and adversary emulation, lifecycle management with git, implementation of CI/CD pipelines for Detection-as-Code, risk assessment based on business context, log source management, and cross-team collaboration.

Red Team

C2 Redirectors Made Easy

Clint Mueller (great name 👏) announces caddy-c2, a Caddy module that automatically parses C2 profiles and proxies legitimate C2 traffic without manually configuring redirect rules for User-Agents and HTTP endpoints. Putting redirectors in front of a C2 server obscures its actual location and allows only legitimate C2 traffic to reach it. If the traffic is detected and blocked, the proxy can easily be destroyed and a new one deployed in its place, which is easier than re-deploying the C2 server.

Stillepost - Or: How to Proxy your C2s HTTP-Traffic through Chromium

dis0rder introduces stillepost, a tool that leverages Chrome DevTools Protocol (CDP) to proxy C2 traffic through legitimate browser processes, helping attackers blend in with normal web traffic. By spawning a headless Chromium browser with remote debugging enabled, connecting via WebSockets, and using the Runtime.evaluate method to execute JavaScript that makes XHR requests, the tool allows malware to send HTTP requests through the browser rather than directly from the implant. This technique benefits from the browser's existing proxy configuration, firewall rules, and expected network behavior, though it's limited to servers that allow CORS requests from arbitrary origins.

Singularity: Deep Dive into a Modern Stealth Linux Kernel Rootkit

MatheuZ describes Singularity, a Loadable Kernel Module (LKM) rootkit developed for modern Linux kernels (6.x) with advanced evasion and persistence techniques. Singularity uses ftrace for syscall hooking instead of directly modifying the System Call Table, implements multi-layered process hiding through PID tracking and directory listing filtering (/proc), conceals files and network connections, provides privilege escalation vectors via signals and environment variables, sanitizes kernel logs to remove evidence of its presence, and includes anti-forensic techniques to prevent detection by both 64-bit and 32-bit tools.

💡 Great overview of a ton of different places and ways to detect and hide on Linux.

AI + Security

Winning the AI Cyber Race: Verifiability is All You Need

Sysdig CISO Sergej Epp argues that AI is transforming offensive security faster than defense because offensive tasks have built-in binary verifiers (shell popped? exploit worked? root access?) while defensive tasks like SIEM analysis, GRC, and forensics lack mechanical ground truth, leading to noise and low precision. “Agents + Oracles = The Unlock.” “Who owns the verifiers wins the AI race.”

Sergej contrasts Google's Sec-Gemini (~12% precision on forensic timeline analysis) with Microsoft's Project Ire (98% precision on Windows driver classification) to show that wrapping LLMs with proper tool scaffolding (sandboxes, decompilers like Ghidra and angr, and validator agents) is what drives results, not raw model capability.

The path forward for defense is engineering six classes of mechanical verifiers: canary verifiers (honeytokens), provenance proofs (SLSA, SBOMs), replay harnesses for detection rules, policy-as-code (Rego, OPA, Checkov), sandboxing and dynamic analysis, and graph-based verifiers (attack graphs, reachability analysis, cloud security posture). Security leaders should demand vendors explain their mechanical verification methods, adopt continuous AI red teaming to expose verifier gaps, and build realistic cyber gyms since LLMs can't generalize on private enterprise data they've never seen. See also Sergej’s BSidesFrankfurt 2025 keynote on this topic.
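Of the verifier classes listed, a replay harness for detection rules is probably the simplest to sketch. The version below is an illustration under my own assumptions, not something from Sergej's post: events are plain dicts, labels are booleans, and the example rule and field names are hypothetical.

```python
from typing import Callable, Dict, Iterable, Optional, Tuple

Rule = Callable[[dict], bool]


def replay(rule: Rule, labeled: Iterable[Tuple[dict, bool]]) -> Dict[str, Optional[float]]:
    """Replay labeled events through a rule and report precision and recall.

    Each item is (event, is_malicious). Ratios are None when undefined.
    """
    tp = fp = fn = tn = 0
    for event, is_malicious in labeled:
        fired = rule(event)
        if fired and is_malicious:
            tp += 1
        elif fired and not is_malicious:
            fp += 1
        elif not fired and is_malicious:
            fn += 1
        else:
            tn += 1
    precision = tp / (tp + fp) if (tp + fp) else None
    recall = tp / (tp + fn) if (tp + fn) else None
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "precision": precision, "recall": recall}


def encoded_powershell(event: dict) -> bool:
    """Hypothetical rule: PowerShell launched with an encoded command."""
    return (event.get("process") == "powershell.exe"
            and "-enc" in event.get("cmdline", "").lower())
```

Run against a fixed corpus in CI, this gives a mechanical answer to "did this rule change make things better or worse?", which is the kind of ground truth an agent can iterate against.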

💡 This post is 🔥, highly recommend. The importance of verifiability is a key insight, I’ve been thinking about this for a while. I’m documenting this prediction here so I can point to it in the future.

Prediction: AI will make formal verification go mainstream

Martin Kleppmann predicts that AI will make formal verification mainstream by dramatically reducing the cost and expertise required to write proof scripts for tools like Rocq, Isabelle, Lean, F*, and Agda. He argues that LLMs are well-suited for writing proofs because invalid proofs will be rejected by verified proof checkers, and that formal verification will let us ship AI-generated code quicker with confidence, reducing the need for human review (which could become the bottleneck). Martin believes the challenge will shift from writing proofs to correctly defining specifications for your software.

💡 I think this is a good argument: formal verification proofs are hard to write and require expertise, but LLMs can potentially churn them out quickly/better than humans at scale (like unit tests), and with the proof checkers we have an oracle that can automatically tell us whether the proof is valid.

The difficulty is going to be actually specifying what the system should do in sufficient detail. Unlike what academia works on, most companies' products are rapidly built, quickly iterated on applications that are being shipped to the user ASAP, so I somewhat doubt that more than like 1% of software could be described by its creators in specification-level detail. That said, this could be huge for OS kernels, parsing libraries (leverage the RFC for that format as the spec), cryptographic libraries, and compilers, which could reduce or eliminate many serious vulnerabilities. Excited to see more in this space!
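For readers who haven't seen a proof assistant, here is a toy Lean 4 example of the split between specification and proof. The theorem name is mine. The statement after the colon is the spec a human has to get right, and the term after `:=` is the part an LLM could generate, which the checker then accepts or rejects.

```lean
-- Specification: addition on natural numbers is commutative.
-- Proof: delegated to the core library lemma `Nat.add_comm`.
theorem add_comm_example (a b : Nat) : a + b = b + a :=
  Nat.add_comm a b
```

If the proof term were wrong, Lean would refuse to compile the file, so no human needs to review the proof itself, only the statement.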

Escaping Isla Nublar: Coming around to LLMs for Formal Methods

Galois intern Twain Byrnes describes building CNnotator, a command-line tool that uses LLMs to automatically synthesize annotations describing memory use for each function in a C project. Instead of trying to jump directly from C to Rust, CNnotator figures out the memory shape of the original C program, which should hopefully make translating to safe Rust much easier. The LLM-generated candidate annotation is tested using CN’s property based testing capability, which functions as an oracle for “is this annotation correct?”

See also symbolic methods of translating C to (unsafe, unidiomatic) Rust, like C2Rust, Corrode, and Citrus, and the paper C2SaferRust, which combines C2Rust, LLMs, and formal methods.

Claude Can (Sometimes) Prove It

Galois’ Mike Dodds found Claude Code surprisingly good at interactive theorem proving (ITP), a traditionally difficult formal methods approach. Mike used Claude Code to formalize a complex concurrent programming paper in Lean, producing 2,535 lines of code with minimal human intervention, though the agent sometimes required guidance through challenging proofs, struggled with parsing errors, and occasionally made deep conceptual mistakes that were difficult to correct.

“What I’ve found is that given a tool that can detect mistakes, the agent can often correct them…we may want to design tools differently for AI agents rather than human use. Instead of trying to avoid failures and only output highly processed information that a human can digest, we may want to be more strict but produce more information about what’s going wrong.”

“As long as I’ve been in the field, automated reasoning has evolved slowly. We are used to small-% improvements achieved through clever, careful work. Claude Code breaks that pattern. Even with the limitations it has today, it can do things that seemed utterly out of reach even a year ago. Even more surprising, this capability doesn’t come from some fancy solver or novel algorithm; Claude Code wasn’t designed for theorem proving at all!

I think what Claude Code really points to is the bitter lesson coming for formal methods, just as it did for image recognition, language translation, and program synthesis. Just as in those fields, in the long run I think formal methods ideas will be essential in making AI-driven proof successful. That’s because AIs will need many of the same tools as humans do to decompose, analyze, and debug proofs. But before that happens, I think we will see much of the clever, careful work we esteem rendered obsolete by AI-driven tools that have no particular domain expertise.”

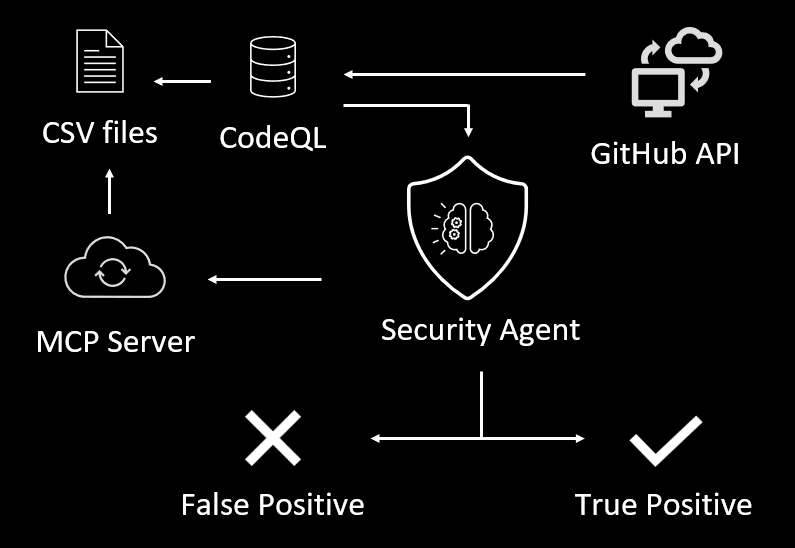

Vulnhalla: Picking the true vulnerabilities from the CodeQL haystack

CyberArk’s Simcha Kosman introduces Vulnhalla (BlackHat EU 2025 abstract and slides here), a tool that uses LLMs to triage static analysis (CodeQL in this case) findings to reduce false positives while vulnerability hunting. Using this process, Simcha found 7 CVEs in projects such as the Linux Kernel and ffmpeg in 2 days for under $80.

The unique methodology parts here are:

Pre-extracting code context using CodeQL into CSV files (start/end lines of code for functions, etc.) which can then be read by the LLM triage process to pull in relevant context in order for it to make a true positive/false positive decision.

Using “Guided Questions” that force the LLM to reason step-by-step about data flow and control flow like an experienced security researcher.

Where is the source buffer declared? What size does it have? Does this size ever change? Where is the destination buffer declared? Are these buffers assigned from another location? What conditions or operations affect them?

→ Then after answering these give your verdict.
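The two steps above can be sketched roughly as follows. This is not Vulnhalla's code: the CSV column names, the finding dict shape, and the function names are assumptions for illustration, and only the guided questions are taken from the post. The LLM call itself is left out.

```python
import csv
import io
from typing import List, Optional

# Guided questions quoted from the post.
GUIDED_QUESTIONS = [
    "Where is the source buffer declared? What size does it have?",
    "Does this size ever change?",
    "Where is the destination buffer declared?",
    "Are these buffers assigned from another location?",
    "What conditions or operations affect them?",
]


def load_functions(csv_text: str) -> List[dict]:
    """Parse pre-extracted function ranges (hypothetical columns)."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return [{"name": r["name"], "file": r["file"],
             "start": int(r["start_line"]), "end": int(r["end_line"])}
            for r in rows]


def enclosing_function(functions: List[dict], file: str, line: int) -> Optional[dict]:
    """Find the smallest function range containing the finding's line."""
    hits = [f for f in functions
            if f["file"] == file and f["start"] <= line <= f["end"]]
    return min(hits, key=lambda f: f["end"] - f["start"]) if hits else None


def build_prompt(finding: dict, functions: List[dict], source_lines: List[str]) -> str:
    """Assemble code context plus guided questions for the triage model."""
    fn = enclosing_function(functions, finding["file"], finding["line"])
    if fn is None:
        snippet = source_lines[finding["line"] - 1]
    else:
        snippet = "\n".join(source_lines[fn["start"] - 1:fn["end"]])
    questions = "\n".join(f"{i}. {q}" for i, q in enumerate(GUIDED_QUESTIONS, 1))
    return (f"Finding: {finding['message']} at {finding['file']}:{finding['line']}\n\n"
            f"Code:\n{snippet}\n\n"
            f"Answer each question in order:\n{questions}\n\n"
            "Then give your verdict: true positive or false positive.")
```

Looking up context from a pre-built table keeps the prompt small and deterministic, instead of asking the model to go find the relevant function on its own.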

💡 We’ve already seen blogs from Sean Heelan and Caleb Gross which also use CodeQL + LLMs for triaging results. One methodology note: Simcha’s post seems to assume that any finding marked by the security agent as a FP is a FP, but in reality the agent will be wrong some percent of the time. It’d be nice to have some human ground truth analysis of the TP/FP rate of the security agent itself.

Misc

Fame

Influencers are royalty at this college, and the turf war is vicious - College, you know, that place people go to learn 🫠

charli xcx - The realities of being a pop star - Fascinating and candid.

Misc

Cimorelli - KPop Demon Hunters - Golden (Harmony Loop Cover)

TikTok unlawfully tracks your shopping habits – and your use of dating apps

Hack Reveals the a16z-Backed Phone Farm Flooding TikTok With AI Influencers - “Doublespeed uses a phone farm to manage at least hundreds of AI-generated social media accounts and promote products. The hack reveals what products the AI-generated accounts are promoting, often without the required disclosure that these are advertisements, and allowed the hacker to take control of more than 1,000 smartphones that power the company.”

AI

Meta’s New A.I. Superstars Are Chafing Against the Rest of the Company

What OpenAI Did When ChatGPT Users Lost Touch With Reality - “Some of the people most vulnerable to the chatbot’s unceasing validation, they say, were those prone to delusional thinking, which studies have suggested could include 5 to 15 percent of the population.”

Andrej Karpathy tweet on the implications of AI to schools

The Economist - How HR took over the world - The profession has rocketed in size and stature. Will AI shrink it?

American remote-work cities show signs of strain - More people working from home → fewer people going downtown, fewer office leases → less tax revenue.

From Vibe Coding To Vibe Engineering – Kitze - Very fun talk, lots of memes.

Lee Robinson describes how he migrated cursor.com from a CMS to raw code and Markdown. He estimated it would take a few weeks, but was able to finish the migration in three days with $260 in tokens and hundreds of agents.

What sticks out to me: a) there’s so much value in having agents be able to quickly read/understand/edit things, that abstractions that make this more difficult are potentially not worth it. b) A lot of SaaS apps / software in general are going to need to fight for their money/customers vs just having customers re-build it in house. Long term in-house maintenance costs might be high though.

“The cost of abstractions with AI is very high. Over abstraction was always annoying and a code smell but now there’s an easy solution: spend tokens. It was well worth the money to delete complexity from our codebase and it already paid for itself.”

Thoughtful response from Sanity, the CMS Cursor migrated from, on the nuances of CMSs and what some of the complexity supports.

✉️ Wrapping Up

Have questions, comments, or feedback? Just reply directly, I’d love to hear from you.

If you find this newsletter useful and know other people who would too, I'd really appreciate if you'd forward it to them 🙏

Thanks for reading!

Cheers,

Clint

P.S. Feel free to connect with me on LinkedIn 👋