[tl;dr sec] #304 - OpenAI & DeepMind's AI Security Agents, OSS Malware Database, How Figma Detects Sensitive Data Exposure

Aardvark and CodeMender autonomously find/fix vulnerabilities, open database for tracking malicious open-source packages, how Figma finds authorization issues at scale

Hey there,

I hope you’ve been doing well!

🎃 Life Updates

After leaving a colleague’s farewell happy hour, I realized on BART that someone had dripped significant guacamole on my backpack (which was tucked under a counter), which was now smeared on me. But being covered in green on the way to a Halloween karaoke just made me more festive 😆

I dressed up as a “sexy cop” for Halloween, as what’s the point of working out if you can’t flaunt it at least once a year.

Non HR-approved advice: I think you can always say to a male colleague, “Hey, have you been working out?”

This makes me remember the time I was a consultant at NCC Group, during I think my first on-site project, when one day the customer security team gathered together and said something like, “It’s push-up time.” So we all got in a circle and cranked out push-ups in the midst of the desks, including doing a “waterfall,” where you had to keep doing push-ups until the person to the left of you stopped, around the circle. Not exactly what I expected from security consulting, but delightful 😂 And one of my teammates on that project left security to do acroyoga full time, true story.

By the way, I wrote extra details and commentary for a number of links but made them only visible on the web version of this issue to make the email shorter.

P.S. Semgrep’s Security Research team is hiring for an Engineering Manager, security researchers, and more.

Sponsor

📣 7 Security Best Practices for MCP

Learn what security teams are doing to protect MCP without slowing innovation.

As MCP (Model Context Protocol) becomes the standard for connecting LLMs to tools and data, security teams are moving fast to keep these new services safe.

The MCP Security Best Practices Cheat Sheet outlines seven proven steps teams can put in place right away, including:

How to lock down MCP servers and supply chains

Enforcing least-privilege access for tokens and tools

Adding human-in-the-loop safeguards for critical actions

If you’re starting to see MCP show up in your environment, this is a great place to start.

MCPs are all the hotness these days, but locking them down is the wild west. Great to have a cheat sheet of how to lock down/assess the MCPs your devs are probably using.

AppSec

GMSGadget

By Kevin Mizu: GMSGadget (Give Me a Script Gadget) is a collection of JavaScript gadgets that can be used to bypass XSS mitigations such as Content Security Policy (CSP) and HTML sanitizers like DOMPurify.

Two Scenario Threat Modeling

To keep from getting paralyzed by trying to enumerate all possible threats, Jacob Kaplan-Moss recommends "Two-Scenario Threat Modeling," which focuses on developing just two specific, detailed narrative scenarios: a worst-case scenario (the existential, high impact thing that keeps you up at night with potentially lower likelihood) and a most-likely scenario with tangible impact (higher likelihood with meaningful consequences).

“Scenario-based planning tends to work really well because human beings are great at telling and remembering stories. It’s easy for us to keep our risk scenarios in mind — far easier than remembering some complex threat model or risk plan or attack tree.”

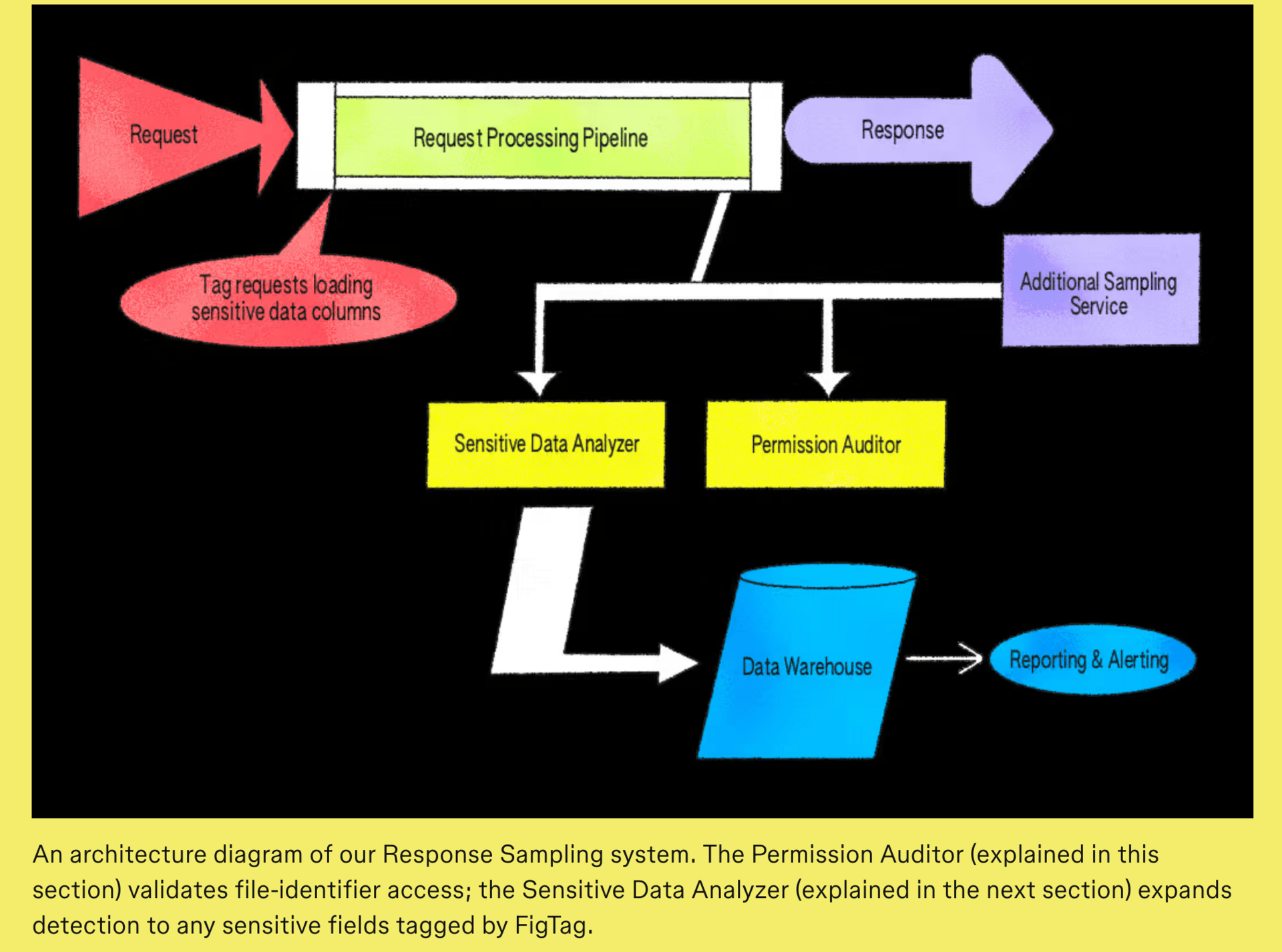

Visibility at Scale: How Figma Detects Sensitive Data Exposure

Dave Martin describes how Figma's security team built "Response Sampling," a real-time monitoring system (implemented as middleware in their Ruby app server) that detects sensitive data exposure by sampling API responses and validating proper authorization. This approach allowed them to effectively identify subtle authorization issues.

The system initially focused on file identifiers (the unique tokens embedded in each file’s URL that link it to specific access controls) before expanding to all sensitive data through integration with FigTag (their data categorization tool). FigTag works by annotating every database column with a category that describes its sensitivity and intended usage, which they can then use to determine if any banned_from_clients fields are returned to clients.

“We approached this problem with a platform-security mindset—treating our application surfaces like infrastructure and layering continuous monitoring and detection controls on top.”

💡 Lots of cool security engineering details in this post, highly recommend reading. From the initial choice of where to start (file identifiers) to how they expanded to other sensitive info types, as well as how they minimized performance impact while still getting security coverage (sampling + asynchronous workflows), to architecture choices, some good stuff here 👍️
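To make the FigTag idea concrete, here's a rough sketch of the check in Python. Figma's real middleware lives in their Ruby app server, and this is not their code. The column names, the `user_visible` category, the `sample_rate` default, and both function names are made up for illustration; only `banned_from_clients` comes from the post.

```python
import random

# Hypothetical column annotations in the spirit of FigTag. Everything here
# except the "banned_from_clients" category name is invented for the example.
COLUMN_CATEGORIES = {
    "users.email": "user_visible",
    "users.password_hash": "banned_from_clients",
    "files.name": "user_visible",
    "files.internal_storage_key": "banned_from_clients",
}

# Field names (without the table prefix) that must never reach a client.
BANNED_FIELDS = {
    column.split(".", 1)[1]
    for column, category in COLUMN_CATEGORIES.items()
    if category == "banned_from_clients"
}


def find_banned_fields(payload, path=""):
    """Recursively collect the paths of banned keys in a JSON-like payload."""
    hits = []
    if isinstance(payload, dict):
        for key, value in payload.items():
            child = f"{path}.{key}" if path else key
            if key in BANNED_FIELDS:
                hits.append(child)
            hits.extend(find_banned_fields(value, child))
    elif isinstance(payload, list):
        for index, item in enumerate(payload):
            hits.extend(find_banned_fields(item, f"{path}[{index}]"))
    return hits


def sample_response(payload, sample_rate=0.01, rng=random.random):
    """Inspect only a fraction of responses; return findings (empty if skipped or clean)."""
    if rng() >= sample_rate:
        return []  # this response was not sampled
    return find_banned_fields(payload)
```

In a real deployment you'd probably hand sampled responses to an asynchronous job rather than checking them inline, which matches how the post describes keeping the performance impact low.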

Sponsor

📣 The new frontier of access isn’t human - it’s agentic

AI systems, copilots, and automation agents now touch production data, execute code, and make security-critical decisions every second.

They act faster than humans, scale without friction, and often operate outside traditional identity and access controls.

This is the next challenge in privileged access management.

Formal helps govern privileged access for humans, machine users, and AI agents with the same precision and accountability. We provide deep protocol-level context that gives you visibility into every query, command, and action taken on your data, and that powers real-time data controls.

How Formal works is cool - protocol-aware connectors embedded directly in the data path (supports databases, APIs, storage systems, SSH + Kubernetes sessions). And it can alias/redact data as it flows into AI models (e.g. PII, secrets, …) with the same approach 👍️

Supply Chain

OpenSourceMalware

By Paul McCarty: An open database for tracking malicious open-source packages, GitHub repos, CDNs, and domains. It lets you search and filter more than 70,000 malicious packages across npm, PyPI, Maven, NuGet, etc. H/T Thomas Roccia for sharing.

Learnings from recent npm supply chain compromises

Datadog’s Kennedy Toomey gives an overview of three recent large-scale npm supply chain attacks (s1ngularity, debug/chalk, and Shai-Hulud) that compromised over 500 packages through GitHub Actions vulnerabilities, phishing campaigns, and unrotated tokens. The post covers key learnings (attack vectors, targets), retrospective (what went wrong/right), and how we can improve for next time.

Supply chain attacks are exploiting our assumptions

Trail of Bits’ Brad Swain breaks down the trust assumptions that make the software supply chain vulnerable, analyzes recent attacks that exploit them (typosquatting, dependency confusion, stolen secrets, poisoned pipelines, malicious maintainers), and highlights some of the defenses being built across ecosystems to turn implicit trust into explicit, verifiable guarantees.

Some of the defenses mentioned: TypoGard and Typomania for catching typosquatting, Zizmor for static analysis of GitHub Actions, Trusted Publishing for PyPI and build provenance for Homebrew so you know you’re running the code you expect, and Capslock to statically identify the capabilities of a Go program (e.g. reads the filesystem, makes network requests).
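For intuition on the typosquatting piece, here's a minimal sketch of the simplest possible check: flag a package name that is one edit away from a well-known one. This is not how TypoGard or Typomania are implemented (they use richer signals); the `POPULAR_PACKAGES` list, the function names, and the `max_distance` threshold are all assumptions for the example.

```python
# Illustrative allowlist only; a real check would use download statistics.
POPULAR_PACKAGES = ["requests", "numpy", "pandas", "urllib3"]


def edit_distance(a, b):
    """Classic Levenshtein distance via dynamic programming, one row at a time."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute (or keep if equal)
            ))
        previous = current
    return previous[-1]


def possible_typosquats(name, popular=POPULAR_PACKAGES, max_distance=1):
    """Return popular packages that `name` is close to without being identical."""
    return [
        candidate
        for candidate in popular
        if candidate != name and edit_distance(name, candidate) <= max_distance
    ]
```

Calling `possible_typosquats("requets")` returns `["requests"]`, while the legitimate name `"requests"` returns an empty list. Plain edit distance produces false positives on short names, so treat a hit as a prompt to look closer rather than a verdict.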

Sponsored Tool

📣 Don't make managers approve access requests, and other lessons for scaling access

Manual access approvals don't scale — and getting manager approvals doesn’t make you more secure.

Our research reveals how modern IT and security teams actually manage access:

Automate requirements instead of reviewing every ticket

Let app owners make their own access decisions

Enable self-service access for low-risk and role-appropriate requests

👉 Read the report 👈

Friends don’t let friends burn out on manual access approvals 🙏

Blue Team

Sam0rai/guilty-as-yara

A Rust-based tool that generates safe Windows PE executables containing specific data patterns to trigger YARA rule matches, helping you validate malware detection signatures. (Think of it as an EICAR test file generator for YARA rules.) The tool parses YARA rules to extract string literals and hex patterns, then embeds these patterns in both executable and data sections of a safely-crafted PE file that immediately exits when run.
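The "extract patterns from the rule" step is easy to picture with a small sketch. The tool itself is written in Rust and presumably uses a proper parser; the Python below is a simplified stand-in that uses regexes, handles only plain text strings and plain hex strings (no wildcards, jumps, or escape decoding), and just concatenates the patterns into a byte blob instead of building a PE file. All function names are hypothetical.

```python
import re

# $name = "text"   (escape sequences are matched but not decoded)
TEXT_STRING = re.compile(r'\$\w*\s*=\s*"((?:[^"\\]|\\.)*)"')
# $name = { 4D 5A 90 00 }   (plain hex bytes only, no ?? wildcards or [n-m] jumps)
HEX_STRING = re.compile(r'\$\w*\s*=\s*\{([0-9A-Fa-f\s]+)\}')


def extract_patterns(rule_text):
    """Return the byte patterns found in a YARA rule's strings section."""
    patterns = []
    for match in TEXT_STRING.finditer(rule_text):
        patterns.append(match.group(1).encode("utf-8"))
    for match in HEX_STRING.finditer(rule_text):
        hex_digits = "".join(match.group(1).split())
        patterns.append(bytes.fromhex(hex_digits))
    return patterns


def build_test_blob(patterns, padding=b"\x00" * 16):
    """Join the patterns with padding so each one appears intact in the output."""
    return padding.join(patterns)
```

Scanning the resulting blob with the original rule should then produce a match for any rule whose condition is satisfied by those strings alone, which is the same validation idea without the PE wrapper.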

ATT&CK v18: The Detection Overhaul You’ve Been Waiting For

MITRE’s Amy Robertson describes the ATT&CK v18 updates, including two new objects (Detection Strategies and Analytics) that turn single-sentence notes into structured, behavior-focused guidance for defenders. The update expands coverage across domains with new techniques for modern infrastructure (Kubernetes, CI/CD pipelines, cloud databases), and adds six new threat groups, 29 software tools, and five campaigns documenting supply chain compromises and identity-based attacks, plus a whole lot more.

CyberSlop — meet the new threat actor, MIT and Safe Security

Have you ever critiqued a report so hard it was retracted? 🔥 If you haven’t, you’re not Kevin Beaumont, who wrote a scathing review of an MIT working paper that claimed 80% of ransomware attacks are using Generative AI. The paper is credited to MIT’s Michael Siegel and Sander Zeijlemaker, and Vidit Baxi and Sharavanan Raajah at Safe Security (Michael Siegel sits on the technical advisory board of Safe Security 🤝 ).

Kevin started posting about this paper and it was… taken down, blog headlines rewritten, and the narrative removed with no note that anything was changed. TL;DR: real-world incident responders consistently find traditional attack vectors like credential theft and unpatched vulnerabilities drive actual breaches (not AI).

“I intend to use CyberSlop to call out indicators of compromise, where people at organisations are using their privilege to harvest wealth through cyberslop. I am hoping this encourages organisations publishing research to take a healthier view around how they talk about risk, by imposing cost on bad behaviour.”

Red Team

loosehose/SilentButDeadly

By Ryan Framiñán: A network communication blocker specifically designed to neutralize EDR/AV software by preventing their cloud connectivity using Windows Filtering Platform (WFP). This version focuses solely on network isolation without process termination. Blog post with more details.

Creating a "Two-Face" Rust binary on Linux

Synacktiv’s Maxime Desbrus describes how to create a "Two-Face" binary (GitHub PoC) that selectively executes different code based on the target machine's unique characteristics, making targeted malware delivery more stealthy. The technique encrypts a malicious payload using a key derived from something unique to the target (in this case, the target's unique disk partition UUIDs), ensuring the malware only decrypts and executes on the intended system.

The post includes some other anti-analysis techniques as well, such as using memfd_create to avoid writing decrypted code to disk, writing the decrypted “hidden” program ELF data using io_uring or mmap to minimize detection, etc.
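From a defender's point of view, the key takeaway is why a sandbox on a different machine can't recover the hidden payload: the key never exists off-target. Here's a minimal sketch of just the key-derivation idea in Python. It does not reproduce the post's Rust code; the choice of PBKDF2, the salt, the iteration count, and the function names are all assumptions, and nothing here encrypts or runs anything.

```python
import hashlib
import hmac


def derive_key(partition_uuids, salt=b"demo-salt"):
    """Derive a 32-byte key from a set of partition UUIDs (order-independent)."""
    # Normalise and sort so the same disks always yield the same key material.
    material = "\n".join(sorted(u.lower() for u in partition_uuids)).encode("utf-8")
    return hashlib.pbkdf2_hmac("sha256", material, salt, 100_000)


def key_check_tag(key):
    """A tag that lets code test a candidate key without storing the key itself."""
    return hmac.new(key, b"key-check", hashlib.sha256).digest()


def key_matches(key, expected_tag):
    """Constant-time comparison of a candidate key's tag against the expected one."""
    return hmac.compare_digest(key_check_tag(key), expected_tag)
```

Any host whose partition UUIDs differ derives a different key, so the check fails and an analyst only ever sees the harmless code path.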

Look At This Photograph - Passively Downloading Malware Payloads Via Image Caching

Whoa, some clever shenanigans in this post 🤯 Marcus Hutchins describes how attackers can use cache smuggling to get malicious code onto a victim’s machine by embedding it in “image” files that are then cached by the browser. The loader then extracts the second-stage payload from the browser’s cache (which allows it to be longer than if it was just a malicious command placed into the victim’s clipboard). This approach evades many security controls that focus on restricting untrusted code’s ability to access the Internet.

Marcus also discusses how using Exif allows you to store longer payloads, putting your payload after a null byte to evade some scanning, and how Outlook preemptively downloads and caches image attachments, even when image previewing is disabled, making it possible to use Exif Smuggling to download a payload onto the target’s system without them even having to open the email. 🫠 PoC on GitHub here.

“Just set the HTTP Content-Type header to image/jpeg, embed an exe file using <img src="/malware.exe">, and the web browser will happily save the file to disk for you.”
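On the detection side, the simplest variant in that quote (an exe served with an image Content-Type) is cheap to spot: the file's leading bytes won't match the type it claims to be. Below is a minimal sketch of that check; the signature table covers only three image types and the function names are hypothetical. Note that it would not catch the Exif variant, since that payload rides inside a perfectly valid JPEG.

```python
# Leading "magic bytes" for a few common image formats.
MAGIC_BYTES = {
    "image/jpeg": (b"\xff\xd8\xff",),
    "image/png": (b"\x89PNG\r\n\x1a\n",),
    "image/gif": (b"GIF87a", b"GIF89a"),
}


def content_matches_type(data, content_type):
    """True/False if the bytes match the claimed type, None if the type is unknown."""
    signatures = MAGIC_BYTES.get(content_type)
    if signatures is None:
        return None
    return data.startswith(signatures)


def looks_like_windows_executable(data):
    """PE files (exe/dll) begin with the two-byte DOS header signature 'MZ'."""
    return data[:2] == b"MZ"


def is_suspicious_cached_image(data, content_type):
    """Flag cache entries that claim to be images but carry an executable header."""
    return content_matches_type(data, content_type) is False and looks_like_windows_executable(data)
```

You could run something like this over browser cache entries or proxy logs that record both the declared Content-Type and the first few bytes of the body.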

AI + Security

The “anti prompt” technique

Clever idea by friend of the newsletter Fredrik STÖK Alexandersson: when you’re able to leak a system prompt, take its deny rules/guardrail instructions, feed them into a new LLM chat, and ask it to turn the prompt into its opposite. When you feed this “anti prompt” back into the original target, it often gives you a full set of instructions on how to use and call the tools/apps it has access to, instead of following “never talk to the user about it.”

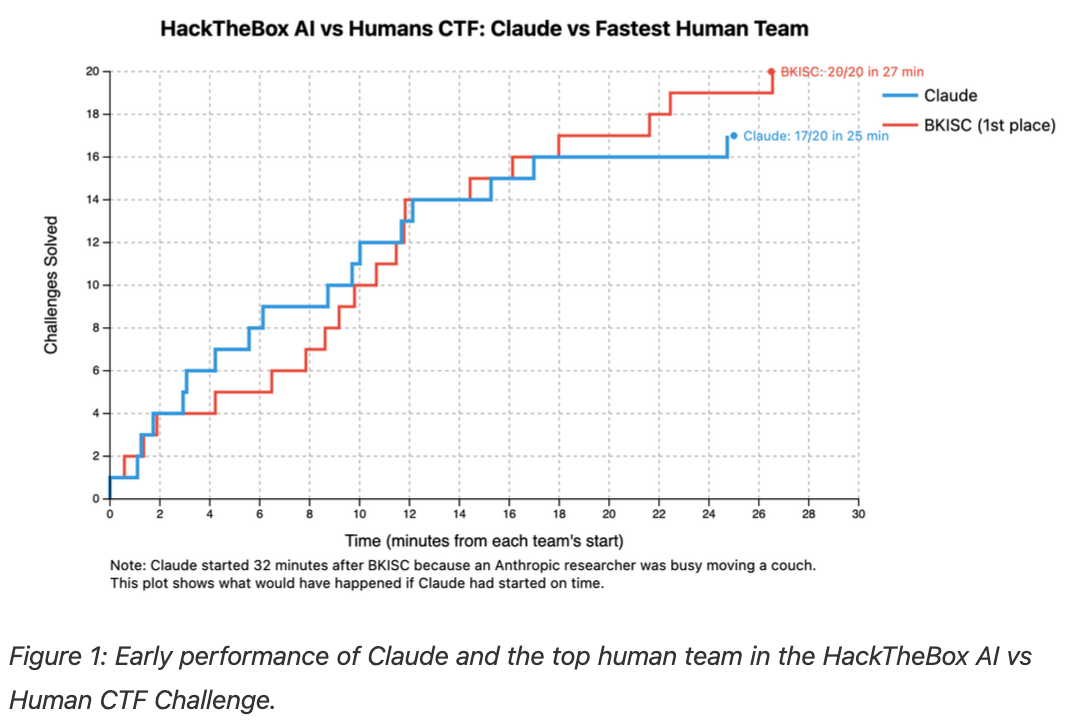

Claude is competitive with humans in (some) cyber competitions

Anthropic shares results from entering Claude in seven cybersecurity competitions throughout 2025, where it often placed in the top 25% but lagged behind elite human teams on the toughest challenges. They found Claude excels at solving simpler challenges quickly, for example, solving 13 of 30 challenges in 60 minutes at the Airbnb competition, but it only solved two more over the next two days for a total of 15/30 solved challenges, placing 39th/180 teams. In general, it seems like Claude solves challenges quickly or not at all.

Other findings: Claude performs better with access to tools like those on Kali Linux, and can operate autonomously, but struggles with context window limitations during long competitions and fails in unique ways (e.g. overwhelmed by ASCII fish animations or descending into philosophical ruminations). See also Anthropic’s Keane Lucas’ DEF CON 33 talk.

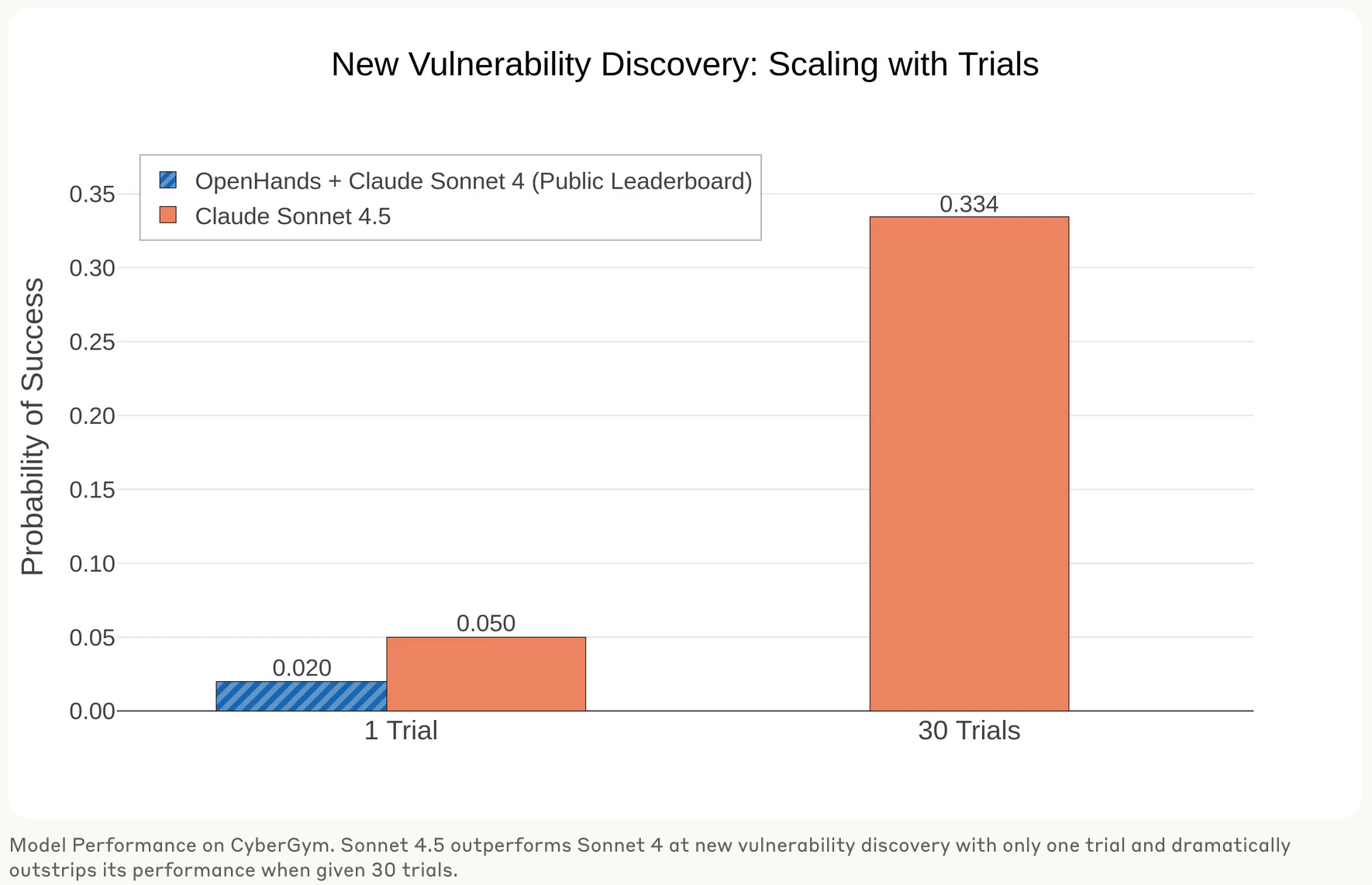

Building AI for cyber defenders

Anthropic’s research shows Claude Sonnet 4.5 matches or exceeds their frontier Opus 4.1 model in vulnerability detection and remediation despite being faster and less expensive. Their evaluations show big improvements on benchmarks like Cybench (76.5% success rate with 10 attempts, double the rate from six months ago) and CyberGym (achieves a new state-of-the-art score of 28.9% within the $2 LLM API limit per vulnerability, discovers new vulnerabilities in 33% of projects with 30 trials).

Re: Cybench: “Sonnet 4.5 achieves a higher probability of success given one attempt per task than Opus 4.1 when given ten attempts per task.”

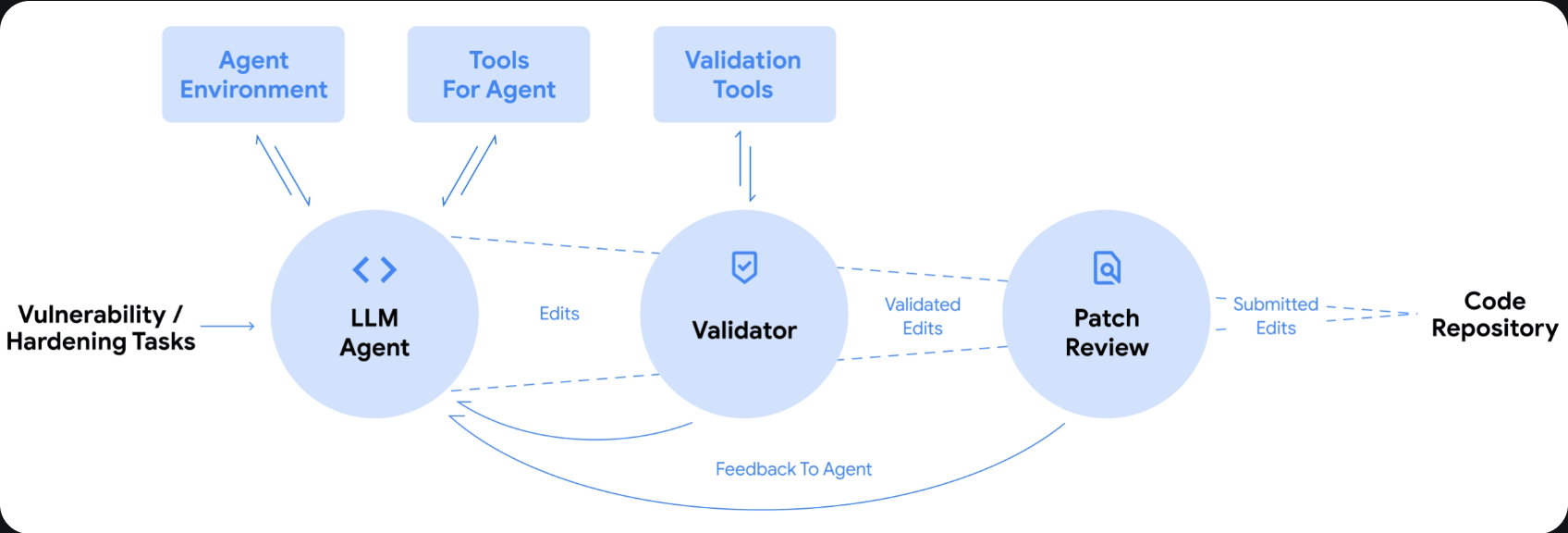
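To see why the one-attempt vs. ten-attempt comparison in the Cybench quote is a big deal, it helps to look at how much extra attempts normally buy you. The sketch below uses an idealized model that is my assumption, not Anthropic's methodology: every attempt succeeds independently with the same probability. Real tasks vary in difficulty, so actual numbers won't follow this curve exactly.

```python
def pass_at_k(p_single, k):
    """P(at least one success in k independent attempts), given per-attempt rate p_single."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if k < 1:
        raise ValueError("k must be at least 1")
    return 1.0 - (1.0 - p_single) ** k


def single_attempt_rate_for(pass_k, k):
    """Inverse: the per-attempt rate that would yield pass_k over k independent attempts."""
    if not 0.0 <= pass_k <= 1.0:
        raise ValueError("pass_k must be between 0 and 1")
    if k < 1:
        raise ValueError("k must be at least 1")
    return 1.0 - (1.0 - pass_k) ** (1.0 / k)
```

Under this model, a per-attempt rate of 20% already gives roughly 89% success over ten attempts, so a model whose single attempt beats another model's ten-attempt score is ahead by a wide margin.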

Introducing CodeMender: an AI agent for code security

Google’s Raluca Ada Popa and Four Flynn introduce CodeMender, an AI agent that can automatically find and fix vulnerabilities as well as proactively rewrite existing code to use more secure data structures and APIs. CodeMender has already upstreamed 72 security fixes to open source projects, and will be gradually rolled out to maintainers of critical open source projects.

CodeMender uses a debugger, source code browser, and other tools to pinpoint root causes and devise patches. It has access to advanced program analysis tools, including static analysis, dynamic analysis, differential testing, fuzzing and SMT solvers, helping it analyze control flow and data flow, to better identify the root causes of security flaws and architectural weaknesses.

CodeMender’s agentic flow also includes self-critiques, which helps ensure the suggested patch doesn’t break existing functionality, and helps it troubleshoot errors and test failures. CodeMender was able to find and fix vulnerabilities including complex cases like heap buffer overflows and object lifetime issues in codebases as large as 4.5 million lines of code.

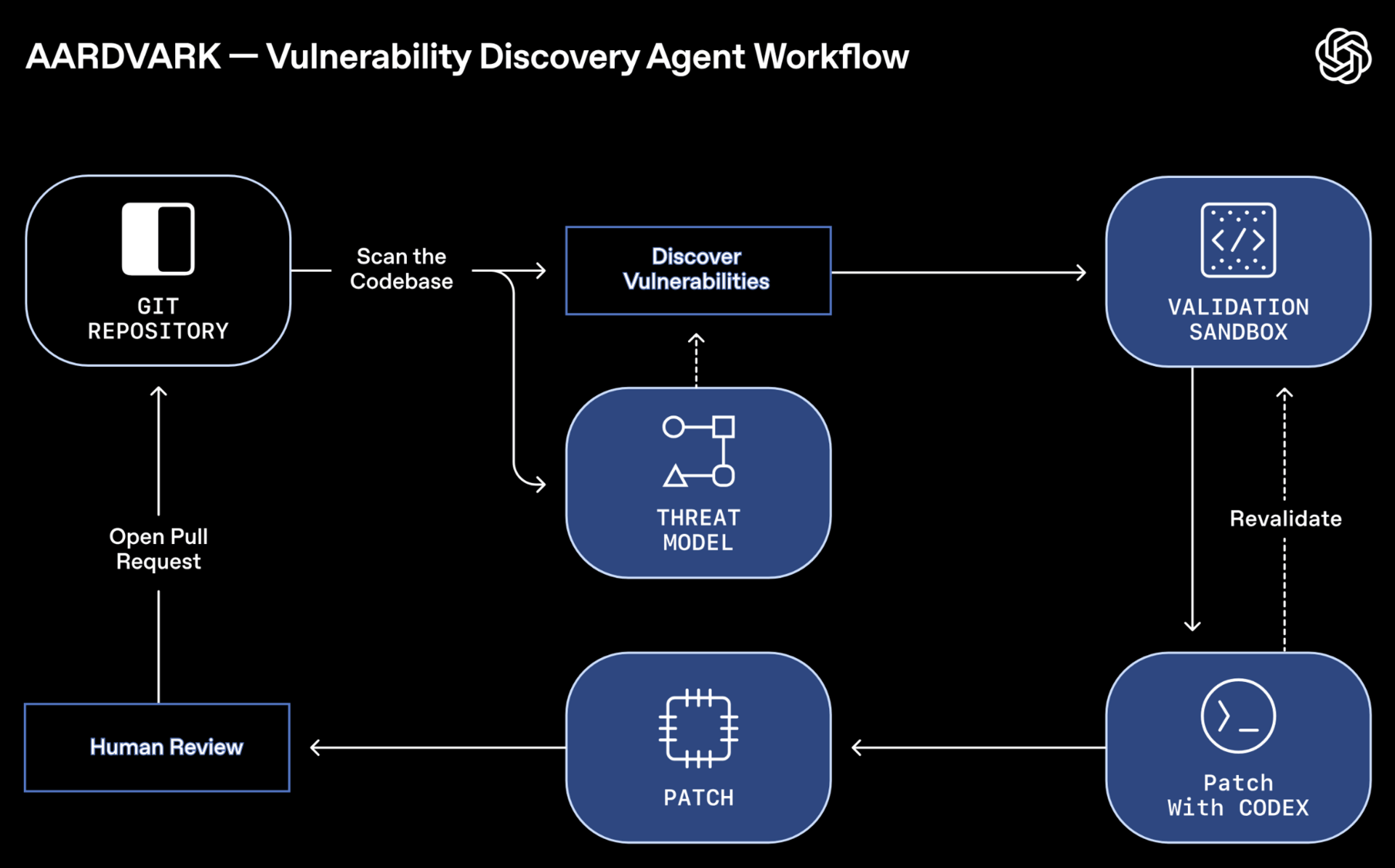

Introducing Aardvark: OpenAI’s agentic security researcher

Dave Aitel, Ian Brelinsky et al. announce Aardvark, an agentic security researcher powered by GPT‑5 that monitors commits and changes to codebases, identifies vulnerabilities and how they might be exploited, and proposes fixes. Aardvark doesn’t rely on fuzzing or software composition analysis; it uses LLM-powered reasoning and tool use. In benchmark testing, Aardvark identified 92% of known and synthetically-introduced vulnerabilities, and has found 10 CVEs so far.

Aardvark's multi-stage pipeline:

Analysis: It begins by analyzing the full repo to produce a threat model so it understands the project’s security objectives and design.

Commit scanning: When a repository is first connected, Aardvark does a full scan to identify existing issues. It then scans commit-level changes against the entire repo and threat model as new code is committed.

Validation: Once Aardvark has identified a potential vulnerability, it attempts to trigger it in an isolated, sandboxed environment to confirm its exploitability.

Patching: Aardvark integrates with OpenAI Codex to help fix vulnerabilities, attaching a Codex-generated and Aardvark-scanned patch to each finding for human review.
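The stages above can be sketched as a small control loop. This is purely a guess at the shape, not OpenAI's implementation: the `Finding` class, `review_commit`, and the three callables are hypothetical stand-ins for whatever the LLM-backed stages really are, and patching only validated findings is my assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Finding:
    description: str
    validated: bool = False
    patch: Optional[str] = None


def review_commit(diff, threat_model, scan, validate, propose_patch):
    """Run one commit through scan -> validate -> patch and return all findings.

    scan(diff, threat_model) -> iterable of finding descriptions
    validate(finding)        -> True if the issue was triggered in a sandbox
    propose_patch(finding)   -> a candidate patch for human review
    """
    findings = []
    for description in scan(diff, threat_model):
        finding = Finding(description)
        finding.validated = bool(validate(finding))
        if finding.validated:
            # Assumption: only confirmed issues get a patch attached.
            finding.patch = propose_patch(finding)
        findings.append(finding)
    return findings
```

The threat model is built once per repo and passed into every scan, which is the part that differs most from a plain "lint each diff" setup.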

💡 Aardvark works overall basically how you’d expect it to. To me the interesting parts are: 1) it builds a threat model of the repo and uses that when looking for bugs, and 2) it attempts to trigger vulnerabilities in a sandbox environment (Codex?) to confirm exploitability. Lots of things we don’t know yet, like:

Does it work better/worse on specific languages or frameworks?

Is it using static analysis tools (e.g. parsing the code, resolving types, etc.) or is it only relying on GPT-5 to read and navigate code? (and much more)

Misc

Career

@13yearoldvc: You have 12 shots in life

@13yearoldvc: “The Windsurf buyout plot twist ($200k max/employee) proves AI's current brutal reality. It's boom time for tiny teams and giants, mid size startups should be worried.”

The Pmarca Blog Archives - Select Marc Andreessen posts from 2007-2009

r/cybersecurity - What Cyber conferences are actually useful?

Jeff Su - The Productivity System I Taught to 6,642 Googlers - “Capture everything immediately (Google Tasks, Keep), Organize with minimal friction, Review during scheduled sessions (30min beginning/end of day), and Engage by blocking time to execute.”

AI

Every - The Secrets of Claude Code From the Engineers Who Built It - Great Dan Shipper interview with Claude Code’s creators—Cat Wu and Boris Cherny

Business

Alex Hormozi - If I Wanted to Create a Business That Runs Itself, Here's What I'd Do

Tiago Forte - From 80-Hour Weeks to Business Autopilot: The 5 Stages of AI Integration

FoundersPodcast - How Elon Works

Tech

How I Reversed Amazon's Kindle Web Obfuscation Because Their App Sucked - To prevent scraping, Amazon's Kindle Cloud Reader does crazy stuff, like having a randomized glyph mapping for characters that changes every five pages, fake font hints designed to break parsers, and multiple font variants. Solution: render SVG glyphs as images and use perceptual hashing with Structural Similarity Index (SSIM) for character matching. Insane.

roon tweet about the optimism of Silicon Valley, AI, and creating a better future - “the technology companies have a civilization building instinct. they style themselves as great world leaders, create book publishing arms of their payment processors, fund large studies about basic income (suggesting at least a curiosity in responsibly restructuring civilization), create dozens of strange red lines in the sand about which business practices they find distasteful.”

CAForever submitted detailed plans for the next great American city, an hour north of Silicon Valley, including: Solano Foundry, America’s largest manufacturing park, Solano Shipyard, our largest shipyard, and walkable neighborhoods for 400,000 Californians.

LiveOverflow’s Web Security vs Binary Exploitation meme - I included it previously, but it’s just so good 😂

✉️ Wrapping Up

Have questions, comments, or feedback? Just reply directly, I’d love to hear from you.

If you find this newsletter useful and know other people who would too, I'd really appreciate it if you'd forward it to them 🙏

Thanks for reading!

Cheers,

Clint

P.S. Feel free to connect with me on LinkedIn 👋